Learn Azure Communication Services Day 16 – Diagnostic Network and Media Messages

This blog post is part of a series called “Learn ACS”, all about Microsoft Azure Communication Services. The series covers a high-level overview of capabilities and considerations, then dives into the development detail of using ACS in your application. Find the rest of the posts in the series at learnACS.dev.

There’s been some exciting new functionality added to Azure Communication Services recently, and that warrants another Learn ACS blog post! This time we’re looking at diagnostic messages sent from ACS to the client. These can notify you of common problems that result in a poor user experience: speaking whilst the mic is muted, a poor network connection, or losing access to the microphone or camera.

Your code can subscribe to these messages and decide how to present them to the user. You could simply log them without telling the user anything, or you could display them on screen alongside your own suggested remedies.
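As a rough sketch of that choice, the function below shows a hint for diagnostics it has a remedy for and only logs everything else, including events that report a problem has gone away. It assumes each event carries the `diagnostic` and `value` fields described below. The `REMEDIES` table, its wording and the `handleDiagnostic` name are hypothetical, and apart from speakingWhileMicrophoneIsMuted the diagnostic names are assumptions based on the Call Diagnostics documentation, so check them against the SDK version you use.

```javascript
// Hypothetical hint table. Only speakingWhileMicrophoneIsMuted is named in
// this post; the other keys are assumed from the Call Diagnostics docs and
// may differ between SDK versions.
const REMEDIES = {
  speakingWhileMicrophoneIsMuted: "You seem to be talking while muted. Unmute to be heard.",
  noMicrophoneDevicesEnumerated: "No microphone was found. Check that one is plugged in.",
  networkReconnect: "Your connection dropped and is reconnecting. Check your network.",
};

// A flag of true, or a quality of Poor (2) or Bad (3), indicates a problem.
function isProblem(value) {
  return value === true || (typeof value === "number" && value >= 2);
}

// Show a hint for known, currently active problems; log everything else
// (unknown diagnostics and "resolved" events) without bothering the user.
function handleDiagnostic(event, { log = console.log, show = console.warn } = {}) {
  const hint = REMEDIES[event.diagnostic];
  if (hint && isProblem(event.value)) {
    show(hint);
    return "shown";
  }
  log(`${event.diagnostic} changed to ${event.value}`);
  return "logged";
}
```

Passing `log` and `show` in as functions keeps the decision separate from the UI, so the same logic works whether hints end up in a toast, a banner or just the console.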

There are two types of message you can subscribe to: network messages and media messages. Each type has a single ‘diagnosticChanged’ event to subscribe to, and once subscribed you’ll receive an event whenever one of its diagnostics changes. Each event is a JSON object with the following data values:

- mediaType – the type of media the diagnostic relates to, for instance “Audio” or “Video”.

- diagnostic – the name of the diagnostic being reported. For instance, “speakingWhileMicrophoneIsMuted” is a diagnostic that indicates someone is speaking whilst muted.

- valueType – the type used to represent the value of the diagnostic. There are currently two: DiagnosticQuality and DiagnosticFlag.

- value – the actual value of the diagnostic, of the type given in valueType. For a DiagnosticQuality, the value is an enum with possible values Good = 1, Poor = 2 and Bad = 3. For a DiagnosticFlag, the value is a true/false boolean.
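To make those fields concrete, here is a small sketch that turns one event into a readable line, decoding DiagnosticQuality numbers back into Good/Poor/Bad. It assumes valueType arrives as the strings "DiagnosticQuality" or "DiagnosticFlag" and that network events may have no mediaType; the `describeDiagnostic` name and the output format are illustrative only.

```javascript
// Numeric values of DiagnosticQuality, as listed above.
const DiagnosticQuality = Object.freeze({ Good: 1, Poor: 2, Bad: 3 });

// Reverse lookup table: 2 -> "Poor", and so on.
const QUALITY_NAMES = Object.fromEntries(
  Object.entries(DiagnosticQuality).map(([name, num]) => [num, name])
);

// Turn a raw diagnostic event into a one-line summary.
// Assumes valueType is a string naming the value's type.
function describeDiagnostic({ mediaType, diagnostic, valueType, value }) {
  let readable;
  if (valueType === "DiagnosticQuality") {
    readable = QUALITY_NAMES[value] ?? `Unknown (${value})`;
  } else if (valueType === "DiagnosticFlag") {
    readable = value ? "true" : "false";
  } else {
    readable = String(value); // unrecognised valueType: show the raw value
  }
  // Prefix with the media type when the event has one.
  const prefix = mediaType ? `[${mediaType}] ` : "";
  return `${prefix}${diagnostic}: ${readable}`;
}
```

For example, an audio event for speakingWhileMicrophoneIsMuted with a value of true becomes `[Audio] speakingWhileMicrophoneIsMuted: true`.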

Be aware that not all events raised are bad! If something gets fixed (like a user unmuting their mic), a speakingWhileMicrophoneIsMuted event will be raised with a false value, letting you know that the issue has been resolved. It’s also worth knowing that not every diagnostic works on every device and operating system, because some of the signals depend on the implementation of the media stack. For instance, some diagnostics aren’t available in Safari on iOS.
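Because a fix arrives as just another event, one way to handle it is to keep a set of currently active issues: add a diagnostic when it reports a problem and remove it when a false flag or a Good quality comes through. This is a minimal sketch under that assumption; `ActiveIssueTracker` is a hypothetical helper, not part of the SDK, and it keys issues by diagnostic name only.

```javascript
// Tracks which diagnostics are currently reporting a problem, so a
// "resolved" event (false flag or Good quality) clears the warning.
class ActiveIssueTracker {
  constructor() {
    this.active = new Map(); // diagnostic name -> latest problem event
  }

  // Returns true if an issue was added to or removed from the active set.
  update(event) {
    const problem =
      event.value === true || (typeof event.value === "number" && event.value >= 2);
    if (problem) {
      const isNew = !this.active.has(event.diagnostic);
      this.active.set(event.diagnostic, event); // keep the latest value
      return isNew;
    }
    // Resolved: Map.delete returns true only if the issue was active.
    return this.active.delete(event.diagnostic);
  }

  // Names of the diagnostics that are still reporting a problem.
  list() {
    return [...this.active.keys()];
  }
}
```

The boolean returned by `update` tells the UI when it actually needs to redraw, rather than re-rendering on every event.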

A full list of the available diagnostics is in the Call Diagnostics section of the Calling SDK documentation.

This new functionality is actually implemented as a Call Feature Extension, which is a way for the ACS team to layer additional functionality on top for those who want to consume it. It means importing a new Features component from @azure/communication-calling, but all of that is covered in the sample code for today.

Here’s the code. Don’t forget: on line 21 of client.js, replace the placeholder text with the full URL of your Azure Function created in Day 3, including the code parameter:

index.html

client.js

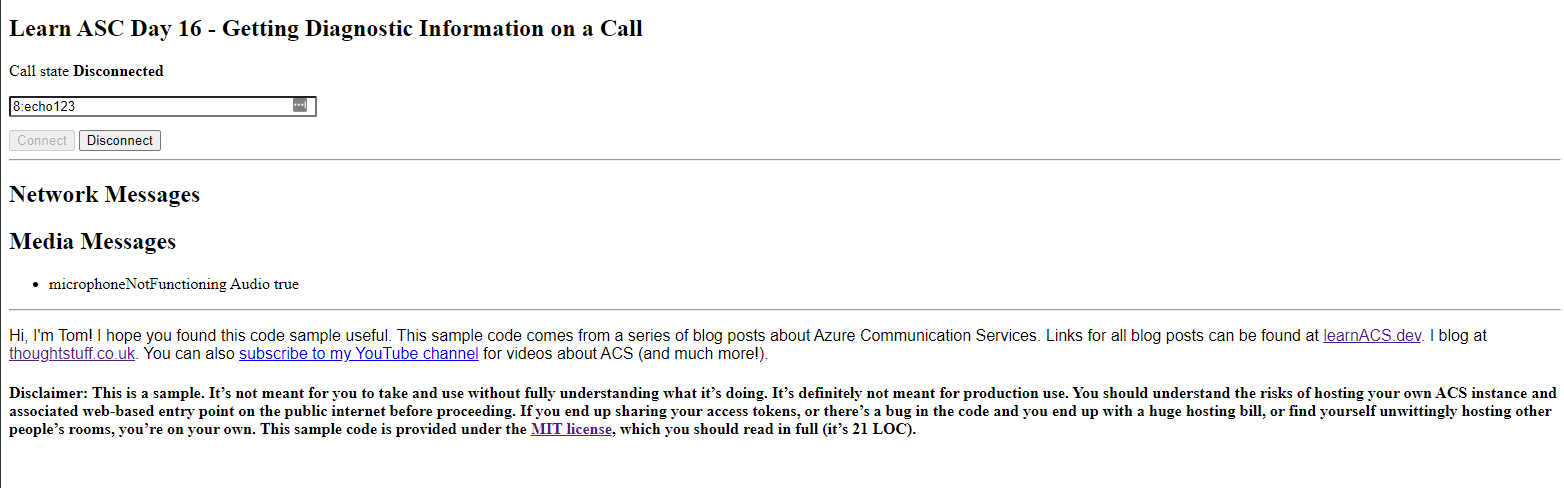

Testing it out

As before, run this command from a command line, then browse to the sample application in your browser (usually http://localhost:8080):

```
npx webpack-dev-server --entry ./client.js --output bundle.js --debug --devtool inline-source-map
```

The call connects to the Echo bot, which records and plays back your audio. You should have enough time on the call to try something that upsets ACS, such as disconnecting your audio device or speaking whilst muted.

What’s the code doing?

The key difference in this sample is the additional import:

`import { Features } from "@azure/communication-calling";`

and then new subscriptions, one for network messages and one for media messages (lines 39 and 45). In each case the event handler simply prints out the diagnostic name, type and value into a list.
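Since the client.js listing isn’t reproduced here, this is a hedged sketch of what those two subscriptions might look like, routed through one handler that is told which channel each event came from. It assumes the diagnostics feature object exposes `network` and `media` emitters with `on`/`off` methods taking a ‘diagnosticChanged’ event name; `subscribeToDiagnostics` is a hypothetical helper, and it is written against that interface rather than the SDK directly so it can be exercised with Node’s EventEmitter.

```javascript
// Wire both diagnostic channels to one handler, tagging each event with
// the channel it came from. `diagnostics` is assumed to expose `network`
// and `media` emitters with on/off methods.
function subscribeToDiagnostics(diagnostics, onDiagnostic) {
  const handlers = {
    network: (info) => onDiagnostic("network", info),
    media: (info) => onDiagnostic("media", info),
  };
  diagnostics.network.on("diagnosticChanged", handlers.network);
  diagnostics.media.on("diagnosticChanged", handlers.media);

  // Return an unsubscribe function so the listeners can be removed
  // when the call ends.
  return () => {
    diagnostics.network.off("diagnosticChanged", handlers.network);
    diagnostics.media.off("diagnosticChanged", handlers.media);
  };
}
```

In the browser, the handler would append an item with `info.diagnostic`, `info.valueType` and `info.value` to the on-page list. In current SDK versions the feature object is obtained with something like `call.feature(Features.UserFacingDiagnostics)`, but the accessor and feature name have changed between releases, so check which one your installed version exposes.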

Today, we explored using the new diagnostic events in Azure Communication Services. Links for all blog posts can be found at learnACS.dev. You can also subscribe to my YouTube channel for videos about ACS (and much more!).