Microsoft Teams is getting video filters… and Teams developers will be writing them?

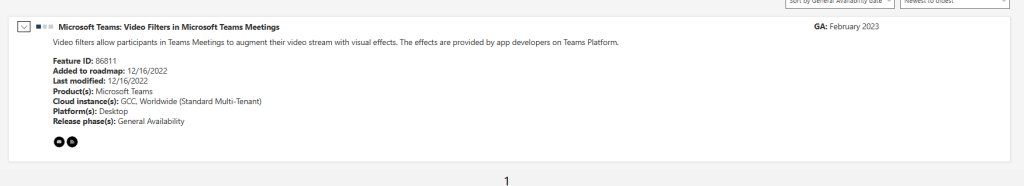

A new item has hit the Microsoft 365 roadmap, and it raises some questions.

The title and first line of the description are clear enough about what is coming:

Microsoft Teams: Video Filters in Microsoft Teams Meetings: Video filters allow participants in Teams Meetings to augment their video stream with visual effects.

Microsoft 365 Roadmap – See What’s Coming | Microsoft 365

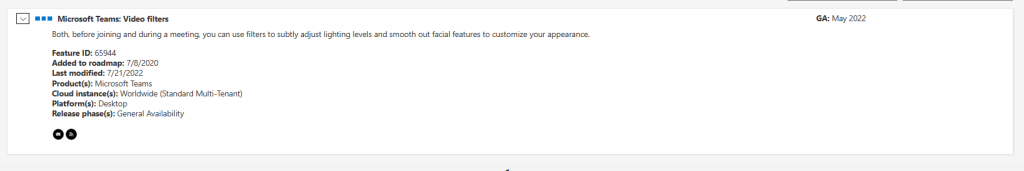

Exactly what sort of filters will be available, we don’t yet know. A previous roadmap item, now released, used the term “video filters” to describe the ability to “subtly adjust lighting levels and smooth out facial features”.

“Augment their video stream with visual effects” sounds a bit more extreme than just softening some wrinkles and correcting bad lighting, though. We’re just going to have to wait and see, but with general availability (GA) forecast for February 2023, we won’t have long to wait.

Developers, Developers, Developers

What’s more interesting right now, though, is the second sentence: “The effects are provided by app developers on Teams Platform”.

What does that mean?

My reading of the sentence is that third-party application developers, building on the Teams Platform, will be creating these effects.

If that’s true, then I have a whole lot more questions. How would this work? What extensibility points do we have today that would enable that to happen? Does this feature point to a new extension point for developers?

Today the existing video effects (and also Mesh for Teams avatars) are rendered locally on the client machine, not in the cloud. They modify the outgoing video stream before it is sent to the cloud, so the altered video is what everyone else in the meeting sees.

There really isn’t a good extensibility model for the Teams client today. That is by design, and one would be complicated to introduce: there are too many different clients and too much abstraction.

That’s what makes this roadmap item interesting. How will developers provide these effects, if they don’t have a way to extend the client with their own code?

Bots in the Cloud?

The only related developer extensibility I can think of right now is around what can be done with bots and video. On the Microsoft Teams Platform today, it is technically possible to host a bot that can take the video from a user, mutate it and play it back, in more-or-less real time. These are called application-hosted media bots and are usually used in IVR-type scenarios, where it’s important to be able to interact with the raw media – such as performing sentiment analysis on audio, or detecting objects in video frames.

With some modification to this approach, I suppose it’s possible to build something that lets users send their video to a hosted service, which would apply the effect and then send the video back.

The biggest obstacle here though is scale and cost. This solution would require application developers to perform all the video rendering work on their servers, in the cloud. That’s going to be unsustainable without a non-trivial financial commitment, which I don’t see users making just for some fancy face effects.
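To put a rough number on that rendering work, here is a back-of-envelope calculation in Python. Every input is an assumption chosen for illustration, not a Microsoft figure: 720p video at 30 fps, ten participants using the effect, and frames handled as uncompressed 4:2:0 video (formats such as I420 or NV12 store 12 bits per pixel).

```python
"""Back-of-envelope load for rendering video effects in the cloud.

Every input below is an illustrative assumption, not a Microsoft figure.
"""


def raw_frame_bytes(width: int, height: int, bits_per_pixel: int = 12) -> int:
    """Bytes in one uncompressed frame; 4:2:0 formats such as I420/NV12 use 12 bits per pixel."""
    return width * height * bits_per_pixel // 8


def raw_stream_bytes_per_second(width: int, height: int, fps: int) -> int:
    """Uncompressed bytes per second a server would have to process for one stream."""
    return raw_frame_bytes(width, height) * fps


def frame_budget_ms(fps: int) -> float:
    """Milliseconds available per frame to receive it, apply the effect and send it back."""
    return 1000 / fps


if __name__ == "__main__":
    width, height, fps = 1280, 720, 30  # assumed: 720p at 30 fps
    participants = 10                   # assumed: ten people using the effect
    per_stream = raw_stream_bytes_per_second(width, height, fps)
    print(f"One frame:        {raw_frame_bytes(width, height):,} bytes")
    print(f"One stream:       {per_stream / 1e6:.1f} MB/s uncompressed")
    print(f"{participants} streams:       {per_stream * participants / 1e6:.1f} MB/s uncompressed")
    print(f"Per-frame budget: {frame_budget_ms(fps):.1f} ms")
```

Under these assumptions a single 720p stream works out to roughly 41.5 MB of raw pixels per second, with about 33 ms to turn each frame around, and that is before counting the CPU or GPU time for decoding, applying the effect and re-encoding. Multiply it by every participant in every meeting and the hosting bill grows quickly.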

It would also introduce a whole bunch of network traffic, latency and generally poor performance to the experience of joining a Microsoft Teams meeting. So, I don’t think this is the approach that will be used.

Scene Studio?

One other possible place this might get implemented is in the existing Scene Studio in the Microsoft Teams Developer Portal. Right now, that lets developers create custom Together mode scenes by providing their own images and layouts.

Given the background blur technology already built into the Microsoft Teams client, presumably once the outline of a person has been identified, it’s possible to replace that part of the feed with an image instead of (or as well as) the background, so maybe we’ll see something like this built. However, I think this would rather limit the usefulness of the feature. I can’t see many situations in Microsoft Teams meetings where it’s desirable to replace oneself with another image, especially with the advances made around Mesh for Teams.
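As a rough illustration of that idea, here is a minimal Python sketch of mask-based compositing. It assumes the client’s segmentation step produces a per-pixel person mask, which is an assumption about how the existing blur works internally; real pipelines typically use soft alpha masks and run on the GPU. The `composite` function and its `replace_person` flag are hypothetical names, not part of any Teams API.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # RGB
Frame = List[List[Pixel]]
Mask = List[List[bool]]       # True where the (assumed) segmentation step found the person


def composite(frame: Frame, replacement: Frame, mask: Mask, replace_person: bool) -> Frame:
    """Mix a camera frame with a replacement image using a person mask.

    replace_person=False keeps the person and swaps the background (like a virtual background).
    replace_person=True does the inverse: swaps the person and keeps the room.
    """
    out: Frame = []
    for frame_row, replacement_row, mask_row in zip(frame, replacement, mask):
        out_row: List[Pixel] = []
        for camera_px, replacement_px, is_person in zip(frame_row, replacement_row, mask_row):
            # Take the replacement pixel only inside the region being replaced.
            in_replaced_region = is_person if replace_person else not is_person
            out_row.append(replacement_px if in_replaced_region else camera_px)
        out.append(out_row)
    return out


if __name__ == "__main__":
    CAMERA: Pixel = (200, 180, 160)  # hypothetical camera pixel
    IMAGE: Pixel = (0, 0, 255)       # hypothetical replacement-image pixel
    frame = [[CAMERA] * 3 for _ in range(2)]
    replacement = [[IMAGE] * 3 for _ in range(2)]
    mask = [[False, True, False], [False, True, False]]  # person in the middle column

    print(composite(frame, replacement, mask, replace_person=False)[0])
    # [(0, 0, 255), (200, 180, 160), (0, 0, 255)]  background replaced
    print(composite(frame, replacement, mask, replace_person=True)[0])
    # [(200, 180, 160), (0, 0, 255), (200, 180, 160)]  person replaced
```

The point of the sketch is that replacing the person is the same operation as a virtual background with the mask inverted, which is why the existing segmentation work would make it cheap to build.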

The wording of the roadmap item refers to “augmenting” the video stream. So, maybe rather than replacing any part of it, developers will be able to add one or more visual effects to the background via Scene Studio. Maybe a little shimmer on the company logo, or a shooting star in the sky behind you? Is it too much to dream of a dancing Clippy at the end of the conference table?!
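Augmenting rather than replacing could be as simple as alpha-blending a semi-transparent overlay onto the background pixels only. The Python sketch below assumes a per-pixel person mask from the client’s segmentation step; `blend` and `augment_background` are hypothetical helpers, and nothing here reflects how Teams or Scene Studio actually implement effects.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
RGBA = Tuple[int, int, int, float]  # overlay colour plus opacity in [0.0, 1.0]


def blend(base: RGB, overlay: RGBA) -> RGB:
    """Standard 'over' alpha blend: result = a * overlay + (1 - a) * base, per channel."""
    r, g, b, a = overlay
    return (
        round(a * r + (1 - a) * base[0]),
        round(a * g + (1 - a) * base[1]),
        round(a * b + (1 - a) * base[2]),
    )


def augment_background(
    frame: List[List[RGB]], overlay: List[List[RGBA]], person_mask: List[List[bool]]
) -> List[List[RGB]]:
    """Blend the overlay only onto background pixels, so the effect sits behind the person."""
    return [
        [camera_px if is_person else blend(camera_px, overlay_px)
         for camera_px, overlay_px, is_person in zip(frame_row, overlay_row, mask_row)]
        for frame_row, overlay_row, mask_row in zip(frame, overlay, person_mask)
    ]


if __name__ == "__main__":
    grey: RGB = (100, 100, 100)           # hypothetical background pixel
    sparkle: RGBA = (255, 255, 200, 0.5)  # hypothetical half-transparent "shimmer"
    frame = [[grey, grey]]
    overlay = [[sparkle, sparkle]]
    mask = [[False, True]]                # right-hand pixel is the person
    print(augment_background(frame, overlay, mask))
```

Treating the effect as an overlay image means an animated effect, such as a shimmer or a shooting star, is just a different overlay on each frame, and because the blend only happens where the mask says “background”, the person always stays in front of it.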

I’ve asked Microsoft for clarification on what these visual effects are likely to be, who will be making them, and how. I’ll update this blog post if I learn more.